Predicting the future of AI is a risky sport—especially when the industry seems to reinvent itself every 90 days. But in this TechVoices panel discussion, I spoke with five enterprise leaders who understand the AI market as well as anyone in the rapidly evolving sector.

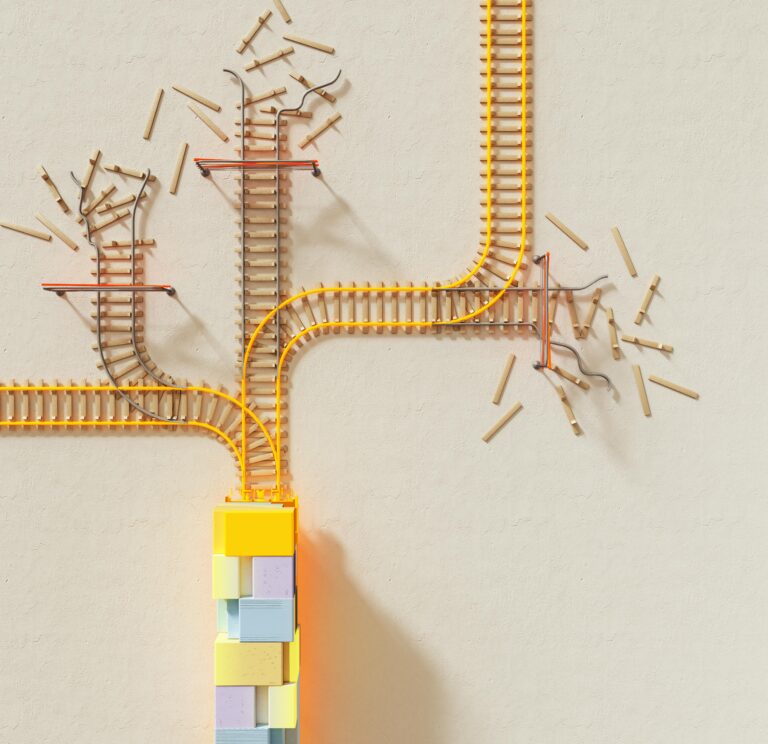

Across the conversation, a shared theme emerged: the AI honeymoon phase is ending, and a more serious era is beginning—one defined by measurable ROI, scalable deployment, better data foundations, and clearer-eyed realism about what agentic AI systems can (and can’t) do today.

The panelists (mostly) converged on a 2026 outlook that’s less about a single breakthrough model and more about an architectural shift: smaller, domain-specific models; AI embedded deeper into systems of record; and the emergence of AI-native companies that redesign work from scratch rather than bolting AI onto old processes.

In short, 2026 won’t be about AI hype—it will be about who can operationalize AI into durable business value.

Core Takeaways

From pilots to production: 2026 is about measurable value, architecture, and redesigning work—not chasing the latest buzzword.

Beena Ammanath, Global Head of the Deloitte AI Institute: Enterprises are at different maturity stages depending on the type of AI. Traditional AI is thriving, generative AI is maturing into scaled deployments, and agentic AI is largely still at the proof-of-concept stage. Her warning: using agentic AI as a band-aid on old processes wastes its potential; the real opportunity is transformational redesign of roles and workflows around humans and semi-autonomous agents working together.

Chad Dunn, VP, Product Management, AI and Data Management, Dell: Enterprises are getting more sophisticated about ROI, moving beyond labor savings to decision quality and strategic advantage. He also sees a shift from “applying agents to workflows” toward assuming the agent is the primary interface. His guidance to executives: broaden ROI models, embrace hybrid strategies, consider smaller vertical models, and invest in AI literacy.

Ram Chakravarti, CTO, BMC: The market is splitting into haves and have-nots, where deep-pocketed firms push aggressive AI buildouts while spend-constrained companies are more cautious. He expects the “bigger is better” arms race to slow as costs rise and returns diminish—replaced by fit-for-purpose, domain-specific models. He also cautions against vendor “agent-washing” and stresses the organizational shift from deterministic to probabilistic systems.

Nabil Bukhari, President, AI Platforms & CTO, Extreme Networks: The honeymoon is over, but most enterprises are still pre-scale—boards may believe they’re “AI-native,” while operations teams see the messy reality. His key correction is blunt: AI doesn’t create value on its own; value comes from integration into systems of record, workflows, and operating models. His 2026 bet is an inversion of the stack—AI becomes the substrate (core), and enterprises build many small “micro-apps” on top, guided by three priorities: expertise, context, and control.

Sean Kask, Chief AI Strategy Officer, SAP: Adoption metrics are wildly inconsistent, but the underlying pattern is familiar: an organizational learning curve similar to early cloud adoption, including unrealistic legal/procurement demands like guaranteeing probabilistic outputs. He pushed back on the notion that agents “replace SaaS,” arguing that systems of record and explicit process representation remain prerequisites. His executive advice: invest in digital foundations (clean data, harmonized context) and build “real options” by piloting capabilities that let you pivot quickly as models evolve.

Key Quotes

Beena Ammanath on the “band-aid” trap—and why agentic AI demands redesign, not retrofits

“When I say Agentic AI is still in its early phase, what I mean is… this is truly transformational technology. And if we look at it at a use case level or try to do piecemeal use cases or solutions, what’s happening today is you’re applying [a] band-aid on processes and technologies that were built for a different era.”

“I fundamentally think that we need to rethink how we apply this technology and reimagine the entire process or the kind of roles that exist. This is an opportunity to actually use this as a transformational technology and completely reimagine your organization… When agents and humans work together, what does that look like? …That fundamental redesign is not [yet] happening….”

Nabil Bukhari on the biggest misconception: AI doesn’t create value—integration does

“I actually think that the biggest hype is that AI creates value. It actually doesn’t. The application of AI, orchestration of it across your systems, across your operating model and more importantly integration of it in your data systems record or source of record as well as your workflows—that’s what creates value.”

“We’ve all seen news flashes like a 17-year-old who rebuilt a $60 million application in three hours. That has created this kind of hype that AI can solve all of these things on its own… The higher up you go into an organization, the more blind they are… So I think that’s really the biggest hype… the sooner we correct it, the better we can bring everybody down to reality and get to real value.”

Ram Chakravarti on the end of “bigger is better”—and the shift to fit-for-purpose models

“The notion of bigger is better—the current AI landscape is incredibly inefficient. The analogy that I use is it’s like using a sledgehammer to crack a walnut. And I think that in 2026, the AI arms race to build the biggest, best general purpose LLM will slow down…as costs escalate with diminishing returns, and executives start scrutinizing ROI.”

“For me, the future is all about scaling value realization and measurable value… I believe that you will see a significantly greater focus on small, domain-specific language models that are trained for specific industries or for high-precision use cases and can deliver real value at the optimal price point. Simply put…the horse race will change from bigger is better to a fit-for-purpose approach.”

Sean Kask on why non-LLM foundation models may generate more business value than LLMs in 2026

“I think that more value can be generated by non-LLM based foundation models than the current large language models. If LLMs were frozen tomorrow, we could still generate another five or 10 years of value out of them by applying [them]. But that’s only one kind of foundation model.”

“We see other kinds emerging now… world models… robotics… and the one that we’re most excited about at SAP is… relational, pre-trained transformer… a foundation model that does mathematical calculations, which large language models cannot do… forecasting demand… supplier risk… delivery date and price… A lot of the value that we get from AI is really in these kind of forecasts and numerical predictions.”

Chad Dunn on the 2026 interface shift: assuming the agent is the primary UI

“Some of the most forward-looking customers… are making that mental shift away from, ‘Hey, let’s apply agent[ic] AI to a workflow that we have,’ versus ‘let’s assume that the primary interface is an agent.’ …I think we’re seeing a little bit of that now. I think we’re going to see a lot of that in the coming year.”

“You’re going to have companies that are born in AI… not just applying AI to a workflow, but building those workflows with the assumption of AI… the assumption is the agent is the interface, not our traditional interfaces… many of the human interfaces that we’re used to will simply go away and be replaced by an agent interface.”